Achieving consistent growth in a highly competitive mobile app market is no easy feat, especially when it comes to accurately measuring the true impact of your marketing campaigns. Marketers often pour resources into acquisition and retargeting efforts, only to be left wondering: Did this campaign really move the needle, or would these users have converted anyway?

This is where incrementality testing becomes essential. By isolating the true lift generated by your efforts, incrementality testing provides a clear view of what’s working—and what’s not—helping you optimize spend and maximize returns.

If you’re looking to scale your campaigns efficiently, understanding and implementing incrementality testing is key.

What is incrementality?

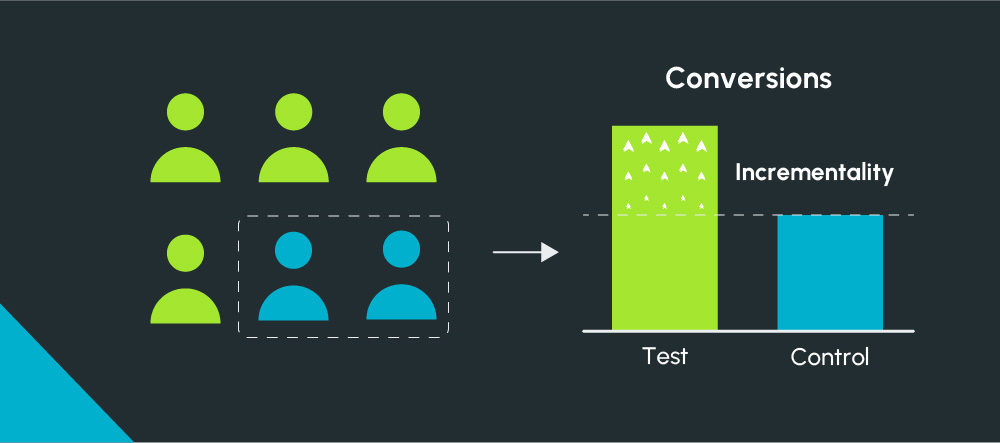

Incrementality testing is a powerful measurement methodology used by growth marketers to determine the true effectiveness of their marketing campaigns. By carefully segmenting audiences into test and control groups, marketers can analyze the additional value generated by their efforts—increased app installs, user engagement, or revenue.

This approach allows marketers to isolate the specific impact of a campaign, ensuring that they understand what drives genuine growth versus what may be simply attributed to organic trends.

With incrementality testing, you can make data-driven decisions that enhance your marketing strategies, ultimately leading to more efficient resource allocation and improved ROI. Understanding this concept is essential as we delve into how it compares to other testing methods, such as A/B testing.

How are incrementality experiments different from A/B experiments?

Incrementality experiments and A/B experiments are both essential methodologies in the toolkit of growth marketers, yet they serve distinct purposes and provide different insights into campaign performance.

The primary focus of incrementality experiments is to determine the true causal impact of a marketing activity. These experiments aim to isolate the effect of a specific campaign or intervention by comparing the behavior of a test group, which is exposed to the marketing activity, with a control group, which is not.

The goal is to identify the incremental lift generated by the campaign—essentially answering the question: What additional conversions or revenue can be attributed to this marketing effort?
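As a rough illustration of that question, lift can be computed from the conversion counts of the two groups. The sketch below is a minimal example rather than any platform's actual method; the function name, the result fields, and the sample numbers are all hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class LiftResult:
    test_rate: float
    control_rate: float
    absolute_lift: float            # difference in conversion rates
    relative_lift: Optional[float]  # None when the control rate is zero
    incremental_conversions: float  # conversions attributable to the campaign


def incremental_lift(test_users: int, test_conversions: int,
                     control_users: int, control_conversions: int) -> LiftResult:
    """Compare conversion rates between the exposed and unexposed groups."""
    if test_users <= 0 or control_users <= 0:
        raise ValueError("both groups need at least one user")
    test_rate = test_conversions / test_users
    control_rate = control_conversions / control_users
    absolute = test_rate - control_rate
    relative = absolute / control_rate if control_rate > 0 else None
    # Scale the rate difference back up to the size of the test group.
    incremental = absolute * test_users
    return LiftResult(test_rate, control_rate, absolute, relative, incremental)


# Hypothetical numbers: 50,000 test users with 1,500 conversions,
# 10,000 control users with 250 conversions.
result = incremental_lift(50_000, 1_500, 10_000, 250)
print(f"relative lift: {result.relative_lift:.1%}")
print(f"incremental conversions: {result.incremental_conversions:.0f}")
```

The control group's conversion rate stands in for what test users would have done without the campaign, so only the difference between the two rates is credited to the marketing effort.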

In contrast, A/B experiments (or split tests) are designed to compare two or more variations of a single element within a campaign—such as ad creatives, landing pages, or email subject lines—to see which performs better. A/B testing focuses on optimizing individual components rather than isolating the overall impact of an entire campaign.

The need for incrementality testing in retargeting campaigns

Incrementality testing in retargeting is a critical method for determining whether a retargeting campaign is actually driving incremental results, such as conversions or revenue, beyond what would have occurred naturally without the campaign.

Retargeting campaigns can be tricky because they target users who are already familiar with your app. Some of these users might return to your app or convert on their own, even without seeing your retargeting ads. Without incrementality testing, it’s easy to misinterpret the campaign’s impact, leading to inaccurate attributions.

For example, a user who previously installed your app may have intended to make an in-app purchase in a few days, whether they saw your retargeting ad or not. Incrementality testing helps marketers measure whether the retargeting ad was the decisive factor in driving that purchase, or if the user would have converted anyway.

Methodologies to conduct incrementality testing

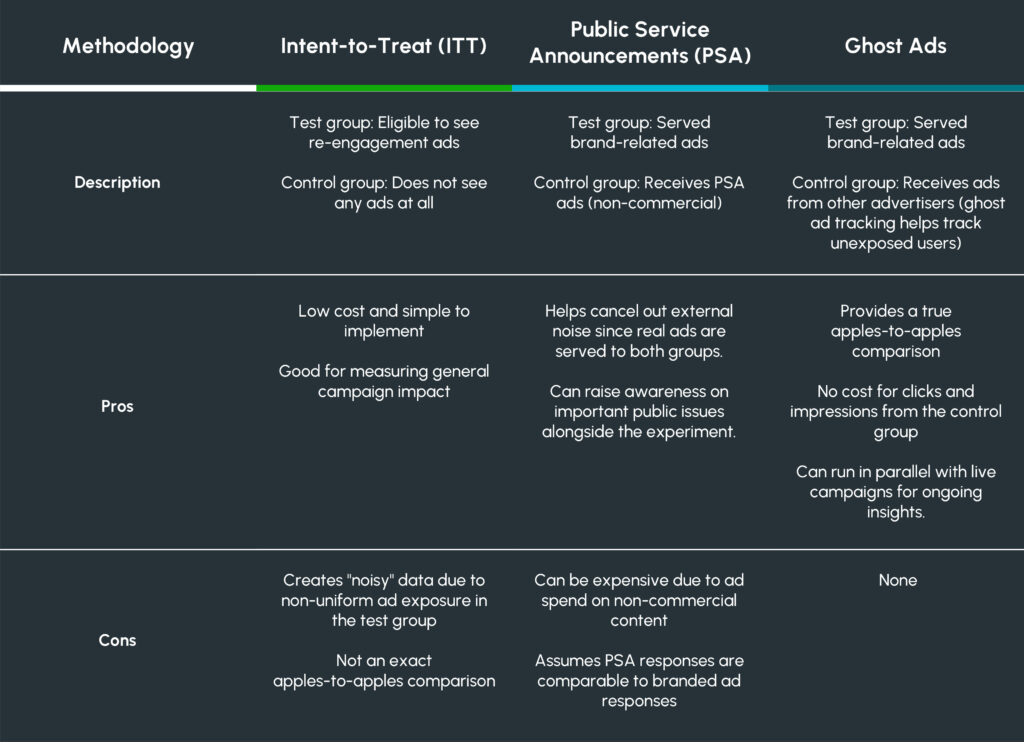

For incrementality testing, the following methodologies help marketers compare test groups exposed to ads with control groups that receive either no ads or alternative content. Here are three common approaches:

Intent-to-Treat (ITT)

In this method, users are divided into a test group eligible to see re-engagement ads and a control group that sees no ads. Outcomes are compared across everyone in each group, whether or not a test-group user was actually served an ad, which makes it a measure of the campaign’s overall impact.

Public Service Announcements (PSA)

The test group is served the brand’s ads, while the control group is shown public service announcements. This method provides a more “realistic” comparison since both groups are exposed to ads, reducing external noise.

Ghost Ads

In this method, the test group is served brand ads, and the control group receives ads from other advertisers. By tracking which users in the control group would have seen the ads, this approach offers a true “apples-to-apples” comparison of exposed vs. unexposed users.
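To make the difference between the ITT and Ghost Ads comparisons concrete, here is a small sketch on made-up data. The `User` record, the `reached` flag (a real impression in the test group, a logged "would have seen it" impression in the control group), and all counts are assumptions for illustration only.

```python
from typing import List, NamedTuple


class User(NamedTuple):
    group: str       # "test" or "control"
    reached: bool    # test: served the brand ad; control: logged a ghost impression
    converted: bool


def conversion_rate(users: List[User]) -> float:
    return sum(u.converted for u in users) / len(users) if users else 0.0


def itt_lift(users: List[User]) -> float:
    """ITT: compare everyone assigned to each group, reached or not."""
    test = [u for u in users if u.group == "test"]
    control = [u for u in users if u.group == "control"]
    return conversion_rate(test) - conversion_rate(control)


def ghost_ads_lift(users: List[User]) -> float:
    """Ghost Ads: compare only users who were (or would have been) reached."""
    test = [u for u in users if u.group == "test" and u.reached]
    control = [u for u in users if u.group == "control" and u.reached]
    return conversion_rate(test) - conversion_rate(control)


def make_users(group: str, reached: bool, total: int, conversions: int) -> List[User]:
    """Build `total` users, the first `conversions` of whom converted."""
    return [User(group, reached, i < conversions) for i in range(total)]


# Hypothetical experiment: 40% of each group is reached.
sample = (
    make_users("test", True, 400, 40)
    + make_users("test", False, 600, 12)
    + make_users("control", True, 400, 24)
    + make_users("control", False, 600, 12)
)
print(f"ITT lift: {itt_lift(sample):.3f}")
print(f"Ghost Ads lift: {ghost_ads_lift(sample):.3f}")
```

In this made-up data the ITT lift is smaller than the Ghost Ads lift because the users who were never reached dilute the comparison; both views describe the same campaign.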

Best practices for incrementality testing and common pitfalls

To make the most of incrementality testing and avoid common pitfalls, mobile app marketers should follow these best practices:

1. Set clear, specific goals

Before conducting any incrementality test, define what you’re measuring. Are you looking to increase app installs, boost in-app purchases, or reduce churn? Setting measurable goals also helps in designing the test and identifying the right KPIs to track.

Running incrementality tests without clear goals can lead to unfocused results and wasted resources. You may end up collecting data that isn’t actionable, leaving you unsure of what decisions to make based on the test outcome.

2. Carefully select control groups

One of the most important aspects of incrementality testing is the control group—a group of users who do not see the ads or marketing campaigns. The key is to make sure that the control group is representative of your target audience and is completely unexposed to the marketing efforts being tested.

If the control group is not properly selected or is exposed to some parts of your marketing campaign (even inadvertently), the results will be inaccurate. This can lead to false conclusions about the campaign’s effectiveness, either overstating or understating the incremental impact.
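One common way to keep a control group both representative and consistently unexposed is to assign users by hashing a stable identifier. The sketch below assumes a string user ID and a 10% holdout; the function name, the experiment label, and the split are hypothetical choices, not a prescribed setup.

```python
import hashlib


def assign_group(user_id: str, experiment: str, control_share: float = 0.1) -> str:
    """Deterministically place a user in "test" or "control".

    Hashing the experiment name together with the user ID gives each
    experiment its own independent split, and the same user always lands
    in the same group, so a control user is never shown the ads later.
    """
    if not 0.0 < control_share < 1.0:
        raise ValueError("control_share must be between 0 and 1")
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "control" if bucket < control_share else "test"


# Hypothetical usage: check the realized split on synthetic user IDs.
groups = [assign_group(f"user-{i}", "retargeting-holdout") for i in range(10_000)]
print(groups.count("control") / len(groups))
```

Because assignment depends only on the identifier and not on user behavior, the two groups should look alike on average, which is what makes the control group a fair baseline.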

3. Ensure large enough sample sizes

For accurate, statistically significant results, you need a large enough sample size. The lift you are trying to detect is often a small difference between two conversion rates, and incrementality tests tend to run longer than A/B tests, so the larger your test and control groups, the better.

A small sample size increases the risk of random noise affecting the results, leading to incorrect conclusions. Budget or time constraints often push teams toward small samples, but an underpowered test tends to produce inconclusive or skewed results.
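As a rough guide to what "large enough" means, the standard two-proportion sample size formula can be sketched as follows. It assumes equal-sized test and control groups, a two-sided test, and a conversion-rate KPI; the function name and the example rates are hypothetical.

```python
import math
from statistics import NormalDist


def users_per_group(baseline_rate: float, relative_lift: float,
                    alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate users needed in EACH group to detect the given lift."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must be distinct and strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired statistical power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    return math.ceil((z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2)


# Hypothetical planning question: a 2.5% baseline conversion rate and a
# hoped-for 20% relative lift, versus a more modest 10% relative lift.
print(users_per_group(0.025, 0.20))
print(users_per_group(0.025, 0.10))
```

The smaller the lift you want to detect, the more users each group needs, which is why budget-limited tests so often end up inconclusive.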

4. Account for external factors

External influences, such as seasonality, promotions, app store rankings, or competitor activity, can have a significant impact on your app’s performance. If the test runs during a high-traffic period, such as the holidays or a sales event like Black Friday, or while you’re running an app store promotion, the results may be skewed by factors beyond the marketing campaign itself.

Neglecting external factors can lead to over-optimistic or inaccurate conclusions about the effectiveness of your campaigns. Always compare your results against any external trends that may have influenced user behavior during the test period.

5. Measure long-term impact

Incrementality testing often requires longer timeframes than traditional A/B testing because it measures true campaign lift over time. Especially for mobile apps, user behavior can vary significantly over the course of days or weeks, and testing too quickly may result in misleading insights.

Running tests for a short duration may not capture the full incremental value of a campaign, especially in cases where user engagement or revenue accumulates over time.
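The effect of test duration can be illustrated by measuring the same experiment over different windows. The sketch below assumes purchase events tagged with the user's group and the number of days since assignment; the event layout, the function name, and the figures are invented for illustration.

```python
from typing import Dict, List, Tuple

# Each event: (group, days since the user was assigned, revenue)
Event = Tuple[str, int, float]


def revenue_per_user_lift(events: List[Event], group_sizes: Dict[str, int],
                          window_days: int) -> float:
    """Test minus control revenue per user, counting only events
    that happened within `window_days` of assignment."""
    totals = {"test": 0.0, "control": 0.0}
    for group, day, revenue in events:
        if day <= window_days:
            totals[group] += revenue
    return (totals["test"] / group_sizes["test"]
            - totals["control"] / group_sizes["control"])


# Hypothetical events for two groups of 100 users each.
events = [
    ("test", 2, 50.0),
    ("control", 3, 45.0),
    ("test", 12, 40.0),
    ("control", 20, 10.0),
    ("test", 25, 30.0),
]
sizes = {"test": 100, "control": 100}
print(revenue_per_user_lift(events, sizes, window_days=7))
print(revenue_per_user_lift(events, sizes, window_days=30))
```

In this invented data most of the difference between the groups appears after the first week, so a seven-day readout would understate the campaign's value.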

6. Regularly validate and reassess campaigns

Incrementality testing is not a one-time activity. As user behavior and market conditions evolve, the effectiveness of your campaigns will also change. It’s important to regularly validate and reassess the incremental impact of your marketing efforts to ensure continued optimization.

Marketers often assume that once a test is complete, the insights are valid indefinitely. However, given how dynamic the mobile app market is, what works today may not work tomorrow.

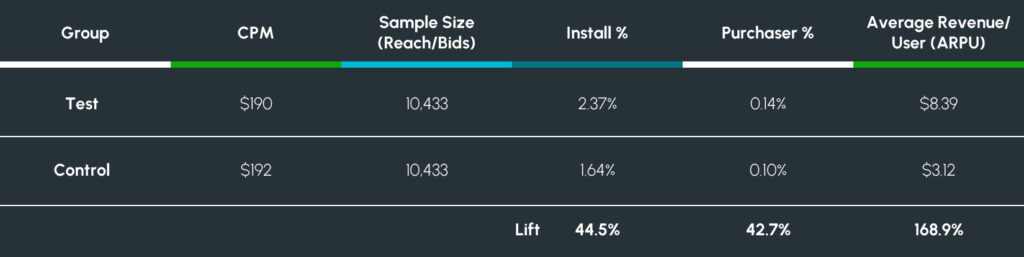

Case Study: Social Casino Gaming App Implements Ghost Ad Testing

A social casino gaming client wanted to experiment with its retargeting campaigns on Android by targeting a new segment of users, those with lifetime purchase value between $20 and $80, and serving them relevant video ads.

The test group consisted of users in this segment who were shown video ads, while the control group consisted of users in the same segment who were shown display banner ads.

Aarki impressions drove a higher install rate, purchaser rate, and ARPU.

Unlock true campaign performance with incrementality testing

Understanding which campaigns drive genuine growth is no longer a luxury—it’s essential. Incrementality testing provides the clarity marketers need to distinguish between organic conversions and those directly influenced by their marketing efforts. You can uncover the real impact of your campaigns and make data-driven decisions that optimize your ad spend and maximize ROI.

But simply knowing about incrementality testing isn’t enough—effective implementation is critical. From selecting a suitable method to avoiding common pitfalls, incrementality testing can be complex, but it’s a game-changer for driving sustainable mobile app growth. Ready to see how incrementality testing can elevate your marketing performance? Get in touch with Aarki today and start running smarter, more effective campaigns that deliver real results.